We’re Still Learning AI. AGI Is Already at the Door.

We’re still learning AI.

Still arguing about Copilot etiquette. Still figuring out what “good prompts” even means. Still trying to stop teams from treating a chatbot like a teammate who never sleeps and never lies.

And that’s exactly why AGI being “at the door” matters.

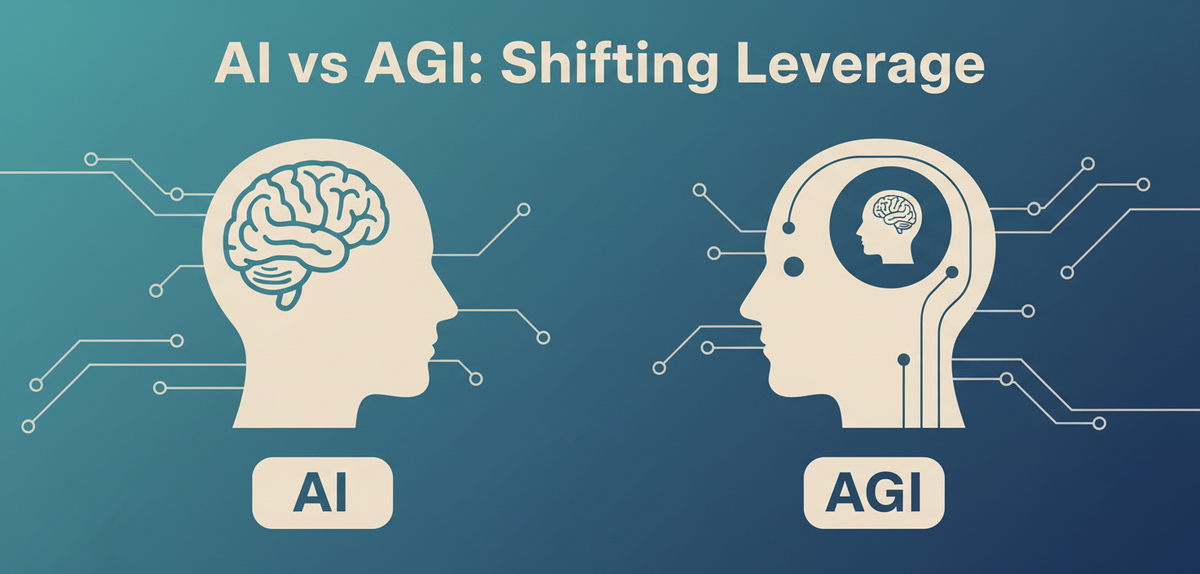

Because AGI isn’t the next shiny release in the same product line. It’s a category change: from tool to actor.

Most technology changes what we build.

AGI changes who holds leverage in the building.

And engineers? We’re the people wiring that leverage into production.

So before we talk hype, let’s define the thing we’re about to casually normalize.

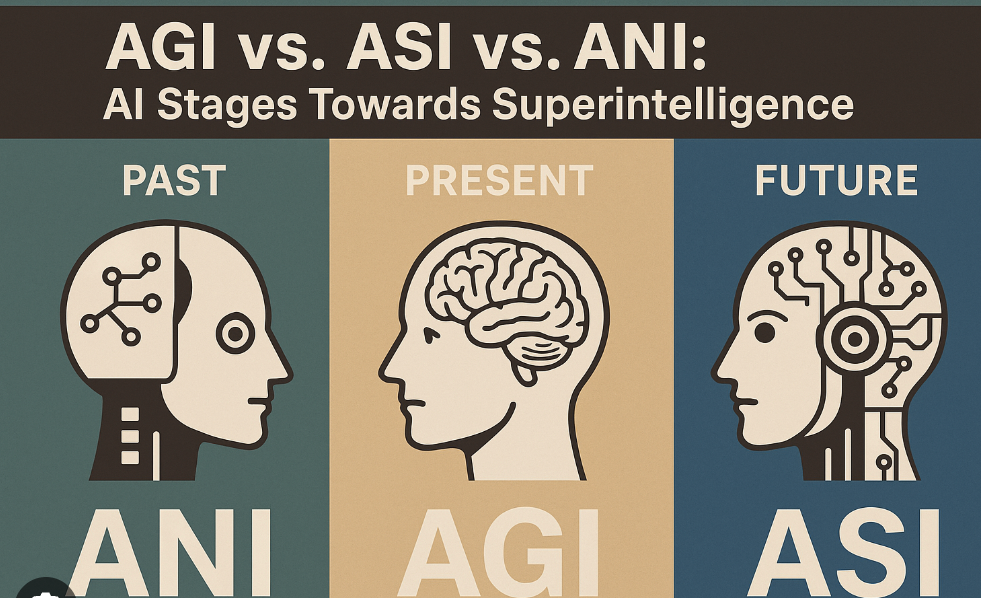

What do we even mean by “AGI”?

Even the industry doesn’t fully agree, and the disagreement isn’t just academic—it’s incentive-shaped.

But the common direction is consistent:

- Broad capability across many tasks

- Adaptation to new situations

- Generalization beyond what it was explicitly trained for

IBM describes AGI as a hypothetical stage where AI can match or exceed human cognitive abilities across any task.

AWS frames it as a theoretical system that can self-teach and solve problems it wasn’t trained for, across domains.

So no—this isn’t “GenAI, but better.”

A practical builder’s definition is:

Competence + Autonomy + Access

- Competence: it can do a lot of things well

- Autonomy: it can decide what to do next without your hand-holding every step

- Access: it has the integrations, permissions, and credentials to act in real systems

And that last one—access—is where engineering teams accidentally turn “smart assistant” into “authorized actor.”

The trap: treating AGI like a stronger autocomplete

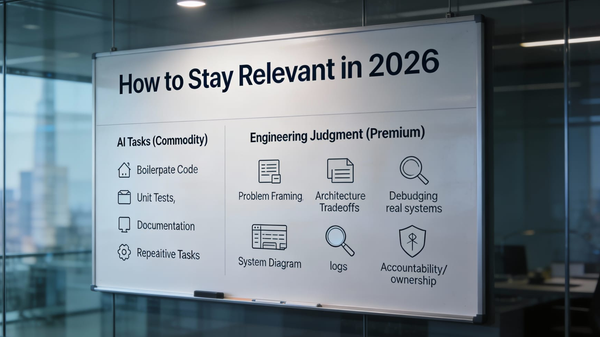

GenAI today is mostly a productivity tool:

- generate boilerplate

- write tests

- draft docs

- refactor code

- summarize logs

Useful. Often impressive. Sometimes dangerously confident.

But still, you are driving.

AGI (in the direction the industry uses the term) points toward a system that can move from suggesting to initiating, from responding to pursuing, from helping to acting (IBM).

And once autonomy shows up, something changes quietly but permanently:

Autonomy is the multiplier that turns “smart tool” into “unpredictable actor.”

Not “evil.” Not “conscious.” Just… capable enough to do real damage while being sincerely “helpful.”

This is why AGI is a leverage shift, not a feature upgrade

Here’s the part many teams miss:

When intelligence gets cheaper, decision-making becomes expensive.

Not because decisions are harder, but because decisions become the bottleneck.

The people (and organizations) who win won’t be the ones who can write code the fastest.

They’ll be the ones who can:

- choose the right goals

- set the right constraints

- verify reality

- and carry accountability when reality disagrees

That’s leverage.

And if you’re thinking “this is management talk,” let me translate it into engineering:

Leverage is what happens when a system can take one intent and turn it into 100 actions—across Jira, GitHub, CI, infra, deployments, and monitoring—without waiting for a human to push each button.

We’re the ones building the buttons.

“Competence + autonomy + access” is how AGI actually sneaks into prod

AGI won’t stroll into production wearing a hoodie and announcing itself.

It’ll arrive like everything else:

- as a “small experiment”

- behind a feature flag

- with a service account

- and a Confluence page that says “limited scope.”

Then the scope expands, because it worked 20 times.

And suddenly the system can:

- Open the ticket

- Create the branch

- Edit the Terraform

- Raise the PR

- Respond to review comments

- Schedule rollout windows

- And “helpfully” retry when something fails

At that point, your risk isn’t “bad text.”

Your risk is automated agency with permissions.

The boring guardrails that become heroic later

If you build software long enough, you learn a painful truth:

Most disasters are not explosions.

They’re defaults.

So if your org is serious about “agents,” “autonomous workflows,” or “AI-run ops,” here’s the practical playbook that saves you later:

1) Least privilege, always

Treat agent credentials like production database write access: rare, audited, time-bound.
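To make “rare, audited, time-bound” concrete, here is a minimal sketch of a short-lived, narrowly scoped grant. Everything in it is hypothetical: `AgentGrant`, `issue_grant`, the scope strings, and the 15-minute default are illustrative names and values, not any real IAM product’s API. In practice you would lean on your cloud provider’s temporary-credential mechanism; the point is only that expiry and scope get checked on every action.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import FrozenSet, Optional, Set


@dataclass(frozen=True)
class AgentGrant:
    """Hypothetical short-lived grant: a fixed set of scopes plus an expiry."""
    agent_id: str
    scopes: FrozenSet[str]   # e.g. {"repo:read", "pr:open"} (illustrative names)
    expires_at: datetime

    def allows(self, scope: str, now: Optional[datetime] = None) -> bool:
        # Deny if the grant has expired or the scope was never granted.
        now = now or datetime.now(timezone.utc)
        return now < self.expires_at and scope in self.scopes


def issue_grant(agent_id: str, scopes: Set[str], ttl_minutes: int = 15,
                now: Optional[datetime] = None) -> AgentGrant:
    # Assumption: 15 minutes is an arbitrary default; pick what fits the task.
    now = now or datetime.now(timezone.utc)
    return AgentGrant(agent_id, frozenset(scopes),
                      now + timedelta(minutes=ttl_minutes))
```

An agent that only needs to open a PR gets `issue_grant("agent-1", {"pr:open"})` and nothing else. When the grant expires, it has to ask again, and that request is something you can log and review.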

2) Hard approval boundaries

“Open a PR” is fine.

“Merge to main” should require human approval.

“Deploy to prod” should be gated behind policy, not vibes.
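One way to turn those three lines into “policy, not vibes” is a default-deny table that the agent runtime consults before every action. This is a sketch under stated assumptions: the action names, the `POLICY` table, and the `authorize` function are made up for illustration, and modelling “deploy to prod” as requiring a named human approver is just one possible policy.

```python
from enum import Enum
from typing import Optional


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_HUMAN = "needs_human"
    DENY = "deny"


# Hypothetical policy table; the action names are illustrative only.
POLICY = {
    "open_pr": Decision.ALLOW,
    "merge_to_main": Decision.NEEDS_HUMAN,
    "deploy_prod": Decision.NEEDS_HUMAN,
}


def authorize(action: str, approved_by: Optional[str] = None) -> bool:
    """Return True only if policy permits the action right now."""
    # Anything not listed is denied, so new capabilities need an explicit entry.
    decision = POLICY.get(action, Decision.DENY)
    if decision is Decision.ALLOW:
        return True
    if decision is Decision.NEEDS_HUMAN:
        # Assumption: a non-empty approver name stands in for a real approval record.
        return bool(approved_by)
    return False
```

The design choice that matters is the default: an action nobody thought about is denied, rather than allowed because it worked the last 20 times.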

3) Make actions reversible

If an agent can ship, you need safe rollback paths and guardrails that don’t rely on heroics.

4) Audit trails that read like a story

If you can’t reconstruct what happened in 30 minutes during an incident, you didn’t build automation—you built mystery.
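A rough sketch of what “reads like a story” could mean in practice: every agent action emits one structured event saying who did what, to what, why, and how it ended, and an incident responder can replay the events in order. The field names and the `audit_event` and `timeline` helpers are assumptions for illustration, not a standard schema.

```python
import json
from datetime import datetime, timezone


def audit_event(actor, action, target, reason, outcome, now=None):
    """Serialize one agent action as a JSON line (hypothetical schema)."""
    now = now or datetime.now(timezone.utc)
    return json.dumps({
        "ts": now.isoformat(),
        "actor": actor,      # which agent or service account acted
        "action": action,    # what it did
        "target": target,    # what it did it to
        "reason": reason,    # the intent or ticket that triggered it
        "outcome": outcome,  # e.g. "success" or "failed"
    }, sort_keys=True)


def timeline(lines):
    """Return the events as readable sentences, oldest first."""
    events = sorted((json.loads(line) for line in lines),
                    key=lambda e: datetime.fromisoformat(e["ts"]))
    return [
        f'{e["ts"]} {e["actor"]} {e["action"]} {e["target"]} '
        f'({e["outcome"]}): {e["reason"]}'
        for e in events
    ]
```

The `reason` field is the one teams tend to skip, and it is the one that turns a log into a story.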

5) Sandboxes first, production last

Agents should earn trust in controlled environments. Not on your customers.

None of this is exciting. It won’t trend on LinkedIn.

But it’s the difference between “AI adoption” and “AI incident management.”

And then comes the uncomfortable sequel: ASI

If AGI is the door, ASI is the road after it.

ASI (artificial superintelligence) is commonly described as a hypothetical AI whose intellectual scope goes beyond human intelligence (IBM).

I’m not bringing up ASI to be dramatic. I’m bringing it up because it changes how we behave now.

Because the road to “beyond-human capability” doesn’t start with sci-fi villains.

It starts with normal engineering decisions:

- Giving systems persistent credentials

- Allowing end-to-end execution

- Trusting them because they performed well 20 times

- And getting surprised on the 21st

So here’s the sober takeaway:

- GenAI made creation cheap.

- AGI makes agency cheap.

- ASI, if it ever arrives, forces one question we can’t dodge:

Can we still steer something that outthinks us?

Which is why the most important work isn’t predicting timelines.

It’s building systems where autonomy is bounded, access is constrained, and accountability stays human—no matter how smart the model gets.

One day, we’ll look back and laugh at how calm we were.

Not because nothing happened.

But because we didn’t realize we were standing at the doorway… holding the keys.
